kubeadm single-node cluster on Ubuntu

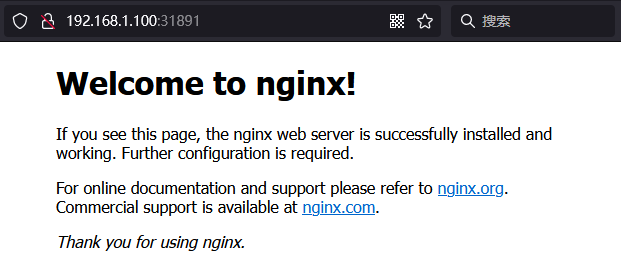

# Architecture

| Cluster role | Hostname | IP | System architecture |
| --- | --- | --- | --- |
| master (control plane) | master | 192.168.1.100 | ubuntu-arm |
| node 1 (worker) | node1 | 192.168.1.101 | ubuntu-arm |

# Environment initialization

On a VPC network, bind the public IP to a dummy interface:

```
ip link add name enp0s6 type dummy
ip addr add <public-IP>/32 dev enp0s6
ip link set dev enp0s6 up
```

```
ip addr add <public-IP>/32 dev enp0s6
```

Add hostname resolution:

```
cat << EOF >> /etc/hosts
192.168.1.100 master
192.168.1.101 node1
EOF
```

Disable the firewall:

```
systemctl status ufw
systemctl stop ufw
systemctl disable ufw
```

Set the time zone to Asia/Shanghai:

```
mv /etc/localtime{,.back}
ln -s /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
date
```

Disable swap. Turn it off for the running system, then comment out the swap entry in `/etc/fstab` so it stays off after a reboot:

```
swapoff -a
vim /etc/fstab
```

Set the queueing discipline to fq and the TCP congestion control algorithm to BBR:

```
echo "net.core.default_qdisc=fq" >> /etc/sysctl.conf
echo "net.ipv4.tcp_congestion_control=bbr" >> /etc/sysctl.conf
sysctl -p
```

Load the bridge filter module. It has to be loaded before the `net.bridge.*` settings are applied, because those keys do not exist until the module is present:

```
modprobe br_netfilter
```

Check that the bridge filter module is loaded:

```
lsmod | grep br_netfilter
```

Enable bridge filtering and IP forwarding:

```
cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
```

Reload the configuration:

```
sysctl --system
```

# Install ipvsadm

Install:

```
apt -y install ipvsadm
```

Register the IPVS modules so they are loaded at boot:

```
cat <<EOF >> /etc/modules
ip_vs
ip_vs_rr
ip_vs_wrr
ip_vs_sh
EOF
```

Load them immediately:

```
modprobe ip_vs
modprobe ip_vs_rr
modprobe ip_vs_wrr
modprobe ip_vs_sh
```

Check that they are loaded:

```
lsmod | grep ip_vs
```

# Install NFS

Install:

```
apt -y install nfs-kernel-server
```

Create the export directory and add it to the exports file:

```
mkdir -p /nfs
cat <<EOF >> /etc/exports
/nfs *(rw,no_root_squash)
EOF
```

Start the service:

```
systemctl enable nfs-kernel-server.service
systemctl restart nfs-kernel-server.service
```

Check the exports:

```
showmount -e
```

# Install Docker

Refresh the package index and install Docker from the Ubuntu repositories:

```
apt update
apt -y install docker.io
```

Switch the cgroup driver to systemd:

```
mkdir -p /etc/docker
```

```
cat <<EOF > /etc/docker/daemon.json
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
```

```
systemctl daemon-reload
```

Enable and start Docker, then restart it so that an already running daemon picks up the new `daemon.json`:

```
systemctl enable --now docker
systemctl restart docker
```

Check the status:

```
systemctl status docker
```

# Install the cluster components

Install the dependencies:

```
apt-get -y install ca-certificates curl software-properties-common apt-transport-https
```

Add the signing key and the Kubernetes apt repository (Aliyun mirror):

```
curl -s https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -
```

```
tee /etc/apt/sources.list.d/kubernetes.list <<EOF
deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
EOF
```

```
apt update
```

Install kubeadm, kubelet and kubectl on every machine in the cluster:

```
apt -y install kubectl=1.21.0-00 kubelet=1.21.0-00 kubeadm=1.21.0-00
```

Pin the versions so they are not upgraded automatically:

```
apt-mark hold kubelet kubeadm kubectl
```

# Pull the cluster images

List the images recommended for this version:

```
kubeadm config images list --kubernetes-version=v1.21.0
```

Pull the images:

```
docker pull k8s.gcr.io/kube-apiserver:v1.21.0
docker pull k8s.gcr.io/kube-controller-manager:v1.21.0
docker pull k8s.gcr.io/kube-scheduler:v1.21.0
docker pull k8s.gcr.io/kube-proxy:v1.21.0
docker pull k8s.gcr.io/pause:3.4.1
docker pull k8s.gcr.io/etcd:3.4.13-0
docker pull k8s.gcr.io/coredns/coredns:v1.8.0
```

List the local images:

```
docker images
```

Next, change the IP that the kubelet started by kubeadm uses. Find the kubelet environment files:

```
systemctl cat kubelet.service | grep EnvironmentFile
```

According to the kubeadm documentation, the first file is generated automatically and should not be edited, so edit the second one (create it if it does not exist):

```
vim /etc/default/kubelet
```

```
KUBELET_EXTRA_ARGS=--node-ip=<public-IP>
```

# Initialize the master

Create the cluster configuration file. Replace both occurrences of `0.0.0.0` marked with a comment with the public IP:

```
cat <<EOF > kubeadm-init.yaml
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
localAPIEndpoint:
  advertiseAddress: "0.0.0.0" # public IP
  bindPort: 6443
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.21.0 # must be compatible with the installed kubeadm version
controlPlaneEndpoint: "0.0.0.0:6443" # your public IP and port
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
networking:
  dnsDomain: cluster.local
  podSubnet: "10.244.0.0/16"
  serviceSubnet: 10.96.0.0/12
etcd:
  local:
    dataDir: /var/lib/etcd
    extraArgs:
      listen-client-urls: https://0.0.0.0:2379
      listen-peer-urls: https://0.0.0.0:2380
apiServer:
  timeoutForControlPlane: 4m0s
controllerManager: {}
dns: {}
scheduler: {}
EOF
```

Run the initialization on the master node:

```
kubeadm init --config=kubeadm-init.yaml
```

To skip the image-pull preflight check, run this variant instead:

```
kubeadm init --config=kubeadm-init.yaml --ignore-preflight-errors=ImagePull
```

On the master node, set up the kubectl configuration file:

```
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

# Custom NodePort range

Edit the API server manifest:

```
vim /etc/kubernetes/manifests/kube-apiserver.yaml
```

```
...
    - --service-cluster-ip-range=10.96.0.0/12
    - --service-node-port-range=1-65535 # added
    - --tls-cert-file=/etc/kubernetes/pki/apiserver.crt
...
```

Restart the API server:

```
systemctl daemon-reload
systemctl restart kubelet
```

# Enable IPVS mode

Change the kube-proxy mode. The IPVS modules must be installed, otherwise kube-proxy falls back to iptables:

```
kubectl edit cm kube-proxy -n kube-system
```

```
metricsBindAddress: ""
# set the value between the quotes to ipvs
mode: "ipvs"
nodePortAddresses: null
```

Delete the old kube-proxy pods so they are recreated with the new configuration:

```
kubectl delete pod -l k8s-app=kube-proxy -n kube-system
```

List the IPVS rules:

```
ipvsadm -Ln
```

# Initialize the nodes

On the master node, create a token and print the matching join command (`--ttl 0` means the token never expires):

```
kubeadm token create --print-join-command --ttl 0
```

Run the printed command on each worker node. The token is generated by your own cluster, so use the values from your output rather than the ones shown here:

```
kubeadm join 192.168.1.100:6443 --token fgbmpk.p2629mxjy1yd0la5 --discovery-token-ca-cert-hash sha256:31ac28d970a3d6cca982f309e6a07391cf9ea0498de902803717ade45d45e9e2
```

Check the cluster. Nodes stay `NotReady` until a network plugin is installed:

```
kubectl get nodes
```

The sample output below was captured on a different cluster, so the node count and version do not match the architecture table:

```
NAME     STATUS     ROLES    AGE     VERSION
master   NotReady   master   10m     v1.17.4
node1    NotReady   <none>   6m11s   v1.17.4
node2    NotReady   <none>   3m2s    v1.17.4
```

# Install the cluster network

> Run on the master node

Kubernetes supports several network plugins, such as flannel, calico and canal. Install only one of them, in a version that matches your Kubernetes version.

Install flannel:

```
kubectl apply -f https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml
```

Watch the pods. Recent flannel manifests create their pods in the `kube-flannel` namespace, so check there as well if no flannel pods appear in `kube-system`:

```
watch kubectl get pods -n kube-system
```

```
NAME                                READY   STATUS    RESTARTS   AGE
coredns-558bd4d5db-d8cll            1/1     Running   0          12m
coredns-558bd4d5db-dqtw8            1/1     Running   0          12m
etcd-jp-master                      1/1     Running   0          12m
kube-apiserver-jp-master            1/1     Running   0          12m
kube-controller-manager-jp-master   1/1     Running   0          12m
kube-proxy-5pcm8                    1/1     Running   0          12m
kube-proxy-zsmc5                    1/1     Running   0          12m
kube-scheduler-jp-master            1/1     Running   0          12m
```

Remove flannel:

```
kubectl delete -f https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml
```

Install calico:

```
kubectl apply -f https://projectcalico.docs.tigera.io/archive/v3.21/manifests/calico.yaml
```

Watch the calico pods:

```
watch kubectl get pods -n kube-system
```

```
NAME                                       READY   STATUS    RESTARTS   AGE
calico-kube-controllers-65f8bc95db-6cm49   1/1     Running   1          3m
calico-node-72rtg                          1/1     Running   1          3m
calico-node-t9rh4                          1/1     Running   1          3m
calico-node-xc8f9                          1/1     Running   1          3m
coredns-5dbbf58dbf-czp64                   1/1     Running   1          3m
coredns-5dbbf58dbf-z85f9                   1/1     Running   1          3m
etcd-master                                1/1     Running   1          3m
kube-apiserver-master                      1/1     Running   1          3m
kube-controller-manager-master             1/1     Running   1          3m
kube-proxy-92f5q                           1/1     Running   1          3m
kube-proxy-j9g64                           1/1     Running   1          3m
kube-proxy-nbpvh                           1/1     Running   1          3m
kube-scheduler-master                      1/1     Running   1          3m
```

Remove calico:

```
kubectl delete -f https://projectcalico.docs.tigera.io/archive/v3.21/manifests/calico.yaml
```

# Check the cluster status

```
kubectl get nodes
```

```
NAME        STATUS   ROLES                  AGE   VERSION
jp-master   Ready    control-plane,master   14m   v1.21.0
jp-node1    Ready    <none>                 13m   v1.21.0
```

# Install cluster monitoring

> Run on the master node

Download metrics-server (v0.3.6):

```
wget https://github.com/kubernetes-sigs/metrics-server/archive/v0.3.6.tar.gz
```

Unpack it and enter the manifest directory:

```
tar -zxvf v0.3.6.tar.gz && cd metrics-server-0.3.6/deploy/1.8+/
```

Edit the deployment manifest. Note that the image below is an amd64 build, while the hosts in the architecture table are ARM; use an image that matches the architecture of your nodes:

```
vim metrics-server-deployment.yaml
```

```
    spec:
      # added: 1 line
      hostNetwork: true
      serviceAccountName: metrics-server
      volumes:
      # mount in tmp so we can safely use from-scratch images and/or read-only containers
      - name: tmp-dir
        emptyDir: {}
      containers:
      - name: metrics-server
        # changed to a registry mirror in China
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server-amd64:v0.3.6
        imagePullPolicy: Always
        # added: 3 lines
        args:
        - --kubelet-insecure-tls
        - --kubelet-preferred-address-types=InternalIP,Hostname,InternalDNS,ExternalDNS,ExternalIP
```

Deploy:

```
kubectl create -f ./
```

Check the pod status:

```
kubectl get pod -n kube-system
```

```
NAME                              READY   STATUS    RESTARTS   AGE
...
metrics-server-5f55b696bd-xndm8   1/1     Running   0          60s
```

Check node resource usage:

```
kubectl top node
```

```
NAME     CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
master   314m         7%     954Mi           25%
node1    200m         5%     482Mi           13%
node2    241m         6%     492Mi           13%
```

# Test the cluster

Create an nginx deployment:

```
kubectl create deployment nginx --image=nginx
```

Create a service that exposes nginx through a NodePort:

```
kubectl expose deployment nginx --port=80 --type=NodePort
```

Check the deployment and the service:

```
kubectl get deployment,svc nginx
```

```
NAME                    READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nginx   1/1     1            1           100s

NAME            TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
service/nginx   NodePort   10.105.117.217   <none>        80:30584/TCP   98s
```

Verify nginx with curl, using the NodePort shown in the `PORT(S)` column. The service is reachable through the IP of any node in the cluster:

```
curl 192.168.1.100:30584
```

Delete the service:

```
kubectl delete svc nginx
```

Delete the deployment:

```
kubectl delete deployment nginx
```

# Authorize a node

To manage the cluster with kubectl from a worker node, copy the kube configuration directory to node1:

```
scp -r /root/.kube/ node1:/root/
```

Management commands then work on node1:

```
kubectl get node
```

# Reset the cluster

Reset the cluster:

```
kubeadm reset
```

Delete the configuration and state directories:

```
rm -rf /root/.kube/
rm -rf /etc/kubernetes/
rm -rf /var/lib/kubelet/
rm -rf /var/lib/dockershim
rm -rf /var/run/kubernetes
rm -rf /var/lib/cni
rm -rf /var/lib/etcd
rm -rf /etc/cni/net.d
```

Flush the firewall rules:

```
iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X
```

# Network errors

> Error log: Warning Unhealthy 24s (x196 over 29m) kubelet (combined from similar events): Readiness probe failed: 2024-03-22 02:39:47.813 [INFO][7095] confd/health.go 180: Number of node(s) with BGP peering established = 0 calico/node is not ready: BIRD is not ready: BGP not established with 10.51.10.4,10.51.10.5

Edit calico.yaml:

```
vim calico.yaml
```

```
Add the new setting around line 650:
----------------------------------------------------------------------
            # Cluster type to identify the deployment type
            - name: CLUSTER_TYPE
              value: "k8s,bgp"
            # Name of the NIC that carries cluster traffic (check your own)
            - name: IP_AUTODETECTION_METHOD
              value: "interface=enp0s5"
            # Auto-detect the BGP IP address.
            - name: IP
----------------------------------------------------------------------
```

Rebuild the network:

```
kubectl apply -f calico.yaml
```
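
Many failures during `kubeadm init` or `kubeadm join` trace back to a host-preparation step that was skipped on one machine. The following is a minimal read-only sketch, not part of the original procedure, that re-checks the settings from the environment-initialization and ipvsadm sections by reading files under `/proc`. The function names and the `PROC_ROOT` override are hypothetical helpers introduced only for this illustration.

```shell
#!/usr/bin/env bash
# Read-only preflight sketch: it only reads files and changes nothing.
# PROC_ROOT is a hypothetical override so the checks can be pointed at a test tree.
PROC_ROOT="${PROC_ROOT:-/proc}"

# Succeeds when no swap device is active (the swaps table holds only its header line).
swap_is_off() {
  [ "$(wc -l < "$PROC_ROOT/swaps")" -le 1 ]
}

# Succeeds when the named module is listed in the modules table.
# Modules compiled into the kernel are not listed there and will report as missing.
module_loaded() {
  grep -q "^$1 " "$PROC_ROOT/modules"
}

# Succeeds when the sysctl key (dotted form) currently has the expected value.
sysctl_equals() {
  local path
  path="$PROC_ROOT/sys/$(printf '%s' "$1" | tr . /)"
  [ -r "$path" ] && [ "$(cat "$path")" = "$2" ]
}

# Prints "OK   <label>" or "FAIL <label>" depending on the check's exit status.
report() {
  local label="$1"
  shift
  if "$@"; then echo "OK   $label"; else echo "FAIL $label"; fi
}

# Runs every check that corresponds to a step in this document.
preflight() {
  report "swap disabled" swap_is_off
  report "br_netfilter loaded" module_loaded br_netfilter
  report "ip_vs loaded" module_loaded ip_vs
  report "bridge-nf-call-iptables = 1" sysctl_equals net.bridge.bridge-nf-call-iptables 1
  report "tcp_congestion_control = bbr" sysctl_equals net.ipv4.tcp_congestion_control bbr
}

preflight
```

Running the script on each machine before `kubeadm init` or `kubeadm join` prints one line per check; any `FAIL` line points back to the step of the same name above.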


February 20, 2025, 12:58
